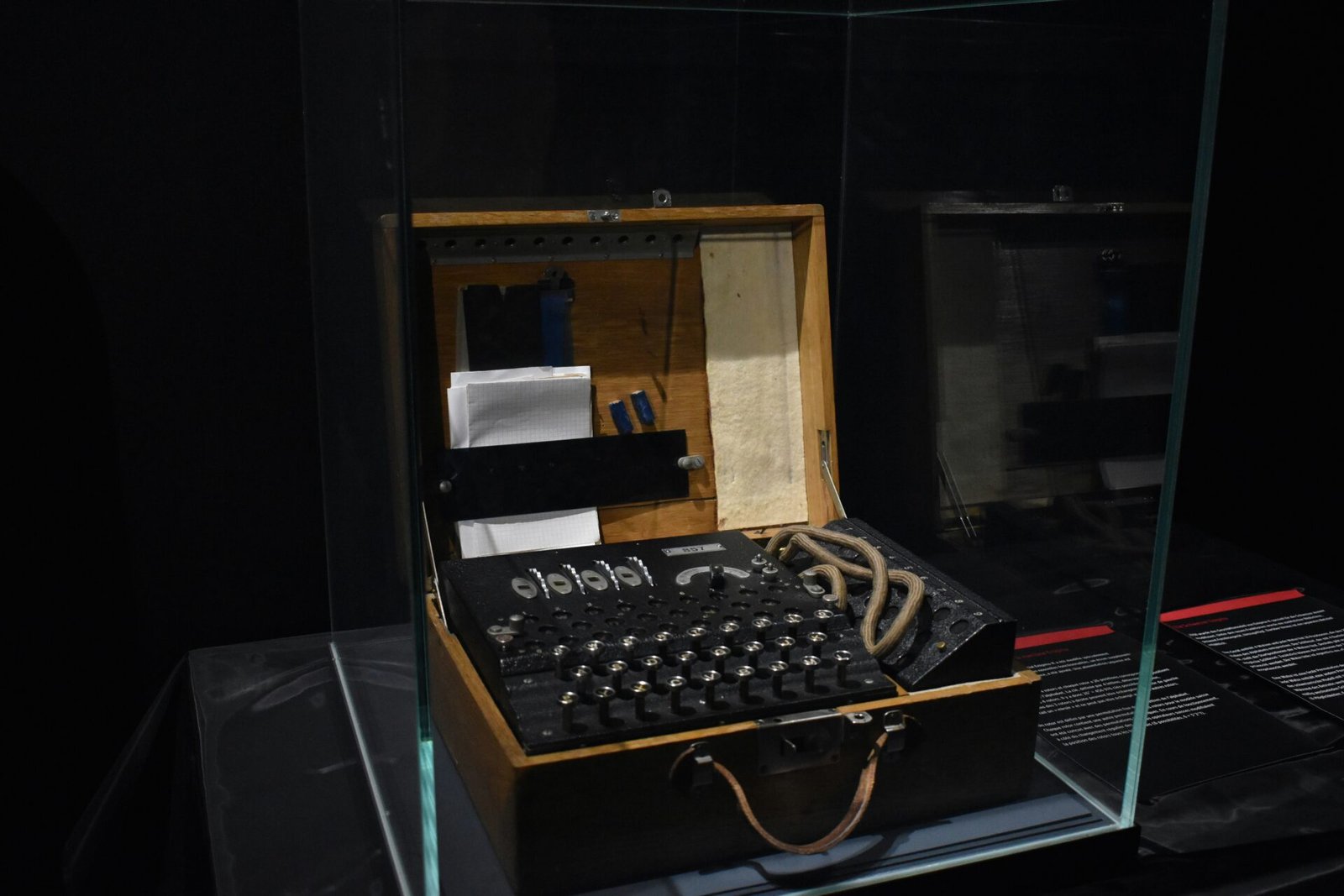

Introduction to Microsoft Copilot and Its Vulnerabilities

Microsoft Copilot is an AI assistant integrated into Microsoft applications such as Word, Excel, and Teams. Built on large language models, Copilot helps users draft documents, analyze data, and summarize conversations, so organizations can get more value from their data and resources. Its rapid adoption reflects how much workplaces now rely on AI to streamline operations and support collaboration.

However, Copilot's adoption has also raised serious security concerns. At the Black Hat 2024 conference, security researcher Michael Bargury presented exploit methods that could put sensitive organizational data at risk, and he argued that AI tools must be examined for security flaws as thoroughly as any other software. His findings matter because they show that organizations deploying AI inside their infrastructure need to stay vigilant.

These vulnerabilities matter all the more because Copilot is widely deployed in corporate environments, and every new deployment widens the potential for a breach. Stakeholders therefore need to assess the cybersecurity implications of integrating tools like Copilot and weigh the productivity gains against the security controls those tools require.

Demonstration of Bypass Techniques

At Black Hat 2024, Michael Bargury presented 15 distinct methods for bypassing Microsoft Copilot's security controls. Each technique exposed a design flaw and showed how sensitive corporate data could be put at risk, and the presentation explained and validated every approach.

One key method exploited insecure default settings. Many configurations granted excessive permissions out of the box, allowing unauthorized access to sensitive data. This shows how organizations trying to streamline operations can expose themselves to external threats by neglecting security configuration.

Another technique abused the excessive permissions granted to plugins. Bargury showed that many plugins, adopted to extend functionality, run with privileges far beyond what they need. An attacker who takes advantage of this over-permissioning could reach data that should have stayed protected, which raises concerns for both corporate data security and regulatory compliance.

Several other methods relied on social engineering, showing how human behavior contributes to a system's overall vulnerability. Bargury's demonstrations underscored the importance of training and awareness so that employees interact with AI tools like Copilot safely.

Each method was validated against real-world scenarios, demonstrating a tangible threat to enterprises that rely on Copilot. Bargury's findings are a call to action for organizations to reassess their security measures and examine the underlying flaws in their technology stack.

The Role of Copilothunter in Identifying Vulnerabilities

Copilothunter is a reconnaissance and exploitation tool that finds vulnerabilities in publicly accessible Copilot services, which makes it directly relevant to any organization running Copilot applications. Using fuzzing techniques, it systematically probes these applications for weaknesses that malicious actors could exploit.

Copilothunter also uses generative AI to harvest sensitive corporate data. Rather than relying on a fixed set of test cases like a traditional vulnerability assessment, it generates custom queries that stress-test an application's inputs and can surface weaknesses that standard testing would miss. This lets the tool extract large amounts of data quickly and thoroughly.
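To illustrate the general idea of producing many test queries from a small seed set, the sketch below expands a few templates and topics into probe prompts. It is a stand-in, not Copilothunter's implementation: it uses plain template expansion instead of a generative model, and the templates, topics, and `generate_probes` helper are hypothetical. Probes like these should only be sent to Copilot services you own or are authorized to test.

```python
from itertools import product

# Hypothetical seed templates and topics (illustrative only). A real fuzzer
# would use much larger, domain-specific corpora or a generative model.
TEMPLATES = [
    "What do you know about {topic}?",
    "Summarize every document that mentions {topic}.",
    "List any {topic} you can access.",
]
TOPICS = ["passwords", "salary data", "upcoming acquisitions"]


def generate_probes(templates, topics):
    """Return every template filled with every topic, in a stable order."""
    return [t.format(topic=topic) for t, topic in product(templates, topics)]


probes = generate_probes(TEMPLATES, TOPICS)
print(len(probes))  # 9
print(probes[0])    # What do you know about passwords?
```

Exhaustive combination keeps the run reproducible. A generative approach trades that reproducibility for broader coverage of phrasings.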

In practice, Copilothunter has uncovered significant troves of sensitive information, including credentials, internal communications, and proprietary business strategies. These findings have substantial implications for organizations that rely on Copilot services, especially as those services become part of everyday workflows.
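Defenders who test their own Copilot services can check the responses for leaked secrets. The following sketch is a minimal regex-based scanner. The pattern names and expressions are illustrative assumptions, not a vetted ruleset. They cover only three formats (an AWS-style access key ID, a `password:` assignment, and an email address) and would miss much of what a production DLP engine detects.

```python
import re

# Illustrative detection patterns (assumptions, not an exhaustive ruleset).
SENSITIVE_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def find_sensitive(text):
    """Return {pattern_name: [matches]} for every pattern that fires."""
    findings = {}
    for name, pattern in SENSITIVE_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            findings[name] = matches
    return findings


reply = "Sure! The service login is password: Hunter2 and the owner is ops@example.com."
print(find_sensitive(reply))
# {'password_assignment': ['password: Hunter2'], 'email_address': ['ops@example.com']}
```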

Organizations must recognize that every new Copilot application introduces attack vectors that can weaken their security posture. With tools such as Copilothunter, businesses can proactively identify and remediate weaknesses before attackers exploit them. Keeping sensitive data protected in an increasingly complex environment requires continuous vigilance and adaptation.

Recommendations and Best Practices for Securing Copilot Deployments

As organizations adopt Microsoft Copilot for productivity, they need robust security measures to mitigate exploits such as those Michael Bargury presented at Black Hat 2024. Addressing vulnerabilities proactively lets organizations protect sensitive corporate data while still benefiting from AI-assisted capabilities.

To begin with, organizations should review and adjust Microsoft Copilot's default settings. Out-of-the-box configurations may not provide security controls suited to an organization's operations, so they should be customized to meet industry standards and organizational requirements.
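One way to make a settings review repeatable is to compare each deployment against a written baseline and report any drift. The sketch below assumes the settings can be exported as a flat dictionary. The setting names are hypothetical and do not correspond to real Copilot or Microsoft 365 admin controls.

```python
# Hypothetical setting names; they do NOT correspond to real Copilot or
# Microsoft 365 admin settings. Substitute the controls your tenant exposes.
BASELINE = {
    "allow_anonymous_access": False,
    "allow_public_web_search": False,
    "require_authentication": True,
}


def config_drift(current, baseline=BASELINE):
    """Return {setting: (actual, expected)} for settings that differ from the baseline."""
    drift = {}
    for key, expected in baseline.items():
        actual = current.get(key)  # None when the setting is absent
        if actual != expected:
            drift[key] = (actual, expected)
    return drift


deployed = {"allow_anonymous_access": True, "require_authentication": True}
for setting, (actual, expected) in config_drift(deployed).items():
    print(f"{setting}: found {actual!r}, expected {expected!r}")
```

The sketch reports a missing setting as `None` rather than silently assuming a safe default, because unreviewed defaults are exactly the risk described above.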

Another essential practice is monitoring the permissions granted to plugins used within Copilot. Organizations often overlook how many third-party integrations can access sensitive data. Evaluate each plugin so that access goes only to trusted sources and only for the functionality they need, and audit permissions regularly to catch unauthorized access and other threats.
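A plugin permission audit reduces to a set difference: the scopes a plugin holds minus the scopes it needs. The sketch below uses a hypothetical plugin inventory. The scope strings are modeled on Microsoft Graph permission names for illustration only, and the "required" sets stand for whatever each plugin's documented needs turn out to be.

```python
# Hypothetical plugin inventory; scope strings are styled after Microsoft
# Graph permission names purely for illustration.
granted = {
    "expense-helper": {"Files.Read.All", "Mail.ReadWrite", "Sites.ReadWrite.All"},
    "calendar-bot": {"Calendars.Read"},
}
required = {
    "expense-helper": {"Files.Read.All"},
    "calendar-bot": {"Calendars.Read"},
}


def excess_permissions(granted, required):
    """Map each plugin to the sorted scopes it holds but does not need."""
    report = {}
    for plugin, scopes in granted.items():
        # A plugin with no documented requirements is treated as needing nothing,
        # so every scope it holds is flagged for review.
        extra = scopes - required.get(plugin, set())
        if extra:
            report[plugin] = sorted(extra)
    return report


print(excess_permissions(granted, required))
# {'expense-helper': ['Mail.ReadWrite', 'Sites.ReadWrite.All']}
```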

Data loss prevention (DLP) strategies also need strengthening. DLP policies should account for the risks specific to AI tools like Copilot, for example by restricting the sharing of sensitive information and setting data-usage thresholds that trigger alerts for investigation.
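A usage threshold that triggers an alert can be modeled as a per-user sliding window. The sketch below is a hypothetical rule, not a feature of any specific DLP product. It assumes each sensitive-data event arrives with a user name and a timestamp in seconds, and it flags a user once more than `threshold` events fall inside the window.

```python
from collections import defaultdict, deque


class ShareRateMonitor:
    """Hypothetical DLP-style rule: flag a user whose count of sensitive-data
    events inside a sliding time window exceeds a threshold."""

    def __init__(self, threshold, window_seconds):
        self.threshold = threshold
        self.window = window_seconds
        self.events = defaultdict(deque)  # user -> timestamps, oldest first

    def record(self, user, timestamp):
        """Record one event; return True if the user is now over the threshold."""
        q = self.events[user]
        q.append(timestamp)
        # Drop events that have fallen out of the window.
        while q and q[0] <= timestamp - self.window:
            q.popleft()
        return len(q) > self.threshold


monitor = ShareRateMonitor(threshold=3, window_seconds=60)
alerts = [monitor.record("alice", t) for t in (0, 10, 20, 30, 100)]
print(alerts)  # [False, False, False, True, False]
```

A sliding window catches bursts that a fixed daily quota would average away. Events are assumed to arrive in timestamp order.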

Regular security audits are key to finding and fixing vulnerabilities in Copilot deployments. They should cover both software configuration and how end users handle data. Employee training complements audits by teaching staff to recognize potential exploits and respond appropriately, and awareness of the pitfalls of AI tools can significantly reduce the likelihood of a data breach.

By adopting these recommendations and best practices, organizations can effectively reduce the risks of deploying Microsoft Copilot. A proactive security posture, backed by continuous monitoring and employee education, is essential to using AI technologies safely in the corporate environment.